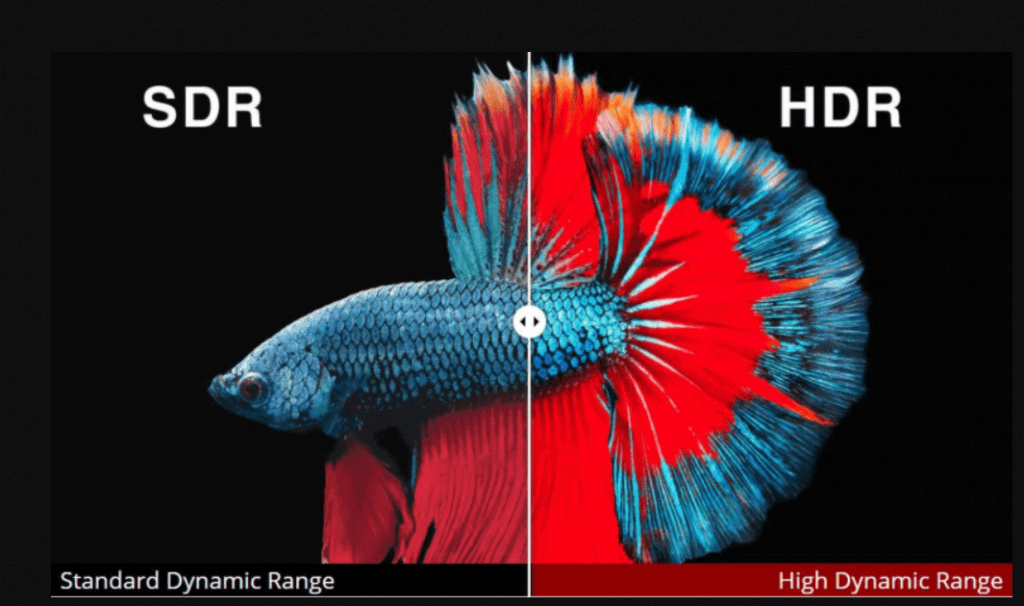

HDR, or High Dynamic Range, is a technique that improves image quality through a greater dynamic range, that is, by increasing how bright and how dark our screen can get. The result is darker dark tones and much brighter light tones.

But before continuing, you need to understand what contrast and dynamic range mean on a TV.

Contrast & Dynamic Range

If we want to know whether a TV is high-end or low-end, one of the most important characteristics to look at is contrast.

Contrast is the ratio between the brightest white and the darkest black that our screen can display.

It is usually expressed as a ratio such as 3000:1, meaning that the brightest white is 3000 times brighter than the darkest black. The higher this value, the better, since greater contrast produces brighter and more accurate colors.
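To make the ratio concrete, here is a minimal sketch that computes it from two luminance measurements. The panel figures (300 cd/m² white, 0.1 cd/m² black) and the function names are hypothetical, chosen only so the result matches the 3000:1 example above.

```python
def contrast_ratio(white_nits: float, black_nits: float) -> float:
    """Return how many times brighter the white level is than the black level."""
    if black_nits <= 0:
        # A black level of zero makes the ratio infinite.
        return float("inf")
    return white_nits / black_nits


def format_ratio(ratio: float) -> str:
    """Format a ratio the way spec sheets usually do, e.g. '3000:1'."""
    if ratio == float("inf"):
        return "inf:1"
    return f"{round(ratio)}:1"


# Hypothetical panel: 300 cd/m² peak white, 0.1 cd/m² black level.
print(format_ratio(contrast_ratio(300.0, 0.1)))  # 3000:1
```

Both values must be measured in the same unit (cd/m²); the ratio itself has no unit.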

Dynamic range is the span between the darkest and the brightest levels a screen can reproduce. HDR content also carries metadata (data that provides information about other data) indicating the brightness level and therefore, indirectly, the contrast. In the dynamic HDR formats this metadata is provided for each scene or even each frame, so instead of keeping a single brightness setting, the picture adapts to every scene and overall quality is higher.
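The benefit of per-scene metadata can be illustrated with a deliberately simplified sketch. It assumes a hypothetical linear tone mapping (real TVs use non-linear curves) and made-up scene values: a display that reaches 600 cd/m² plays a film whose brightest scene hits 4000 cd/m².

```python
from dataclasses import dataclass


@dataclass
class Scene:
    name: str
    peak_nits: float  # brightest pixel in this scene, cd/m²


def scale_factor(content_peak: float, display_peak: float) -> float:
    """Hypothetical linear tone mapping: dim only when content exceeds the display."""
    if content_peak <= display_peak:
        return 1.0
    return display_peak / content_peak


def mapped_peaks(scenes: list, display_peak: float, dynamic: bool) -> dict:
    """Return the peak each scene actually reaches on screen.

    Static metadata: one value (the film's brightest scene) drives every scene.
    Dynamic metadata: each scene is mapped using its own peak.
    """
    film_peak = max(s.peak_nits for s in scenes)
    result = {}
    for s in scenes:
        reference = s.peak_nits if dynamic else film_peak
        result[s.name] = s.peak_nits * scale_factor(reference, display_peak)
    return result


scenes = [Scene("night street", 200.0), Scene("explosion", 4000.0)]
print(mapped_peaks(scenes, display_peak=600.0, dynamic=False))
print(mapped_peaks(scenes, display_peak=600.0, dynamic=True))
```

With a single static value the dark street scene is dimmed to about 30 cd/m² along with everything else, while per-scene metadata lets it keep its 200 cd/m²; only the explosion is compressed to the display's 600 cd/m².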

Many of today's 4K TVs support at least HDR10, and for this reason much of the content being produced also supports HDR10 or Dolby Vision. We will discuss the different types of HDR later.

The growing trend towards this technology has led the main streaming platforms to support it. For example, on Prime Video we can see before watching a movie or series whether it supports HDR; if no HDR label is shown, it does not.

Color gamut

A concept closely related to HDR is WCG (Wide Color Gamut).

The two are related because, for HDR to show better and more vivid colors, a wider range of colors is almost essential. As a result, in 90% of cases a TV with HDR will also support WCG.

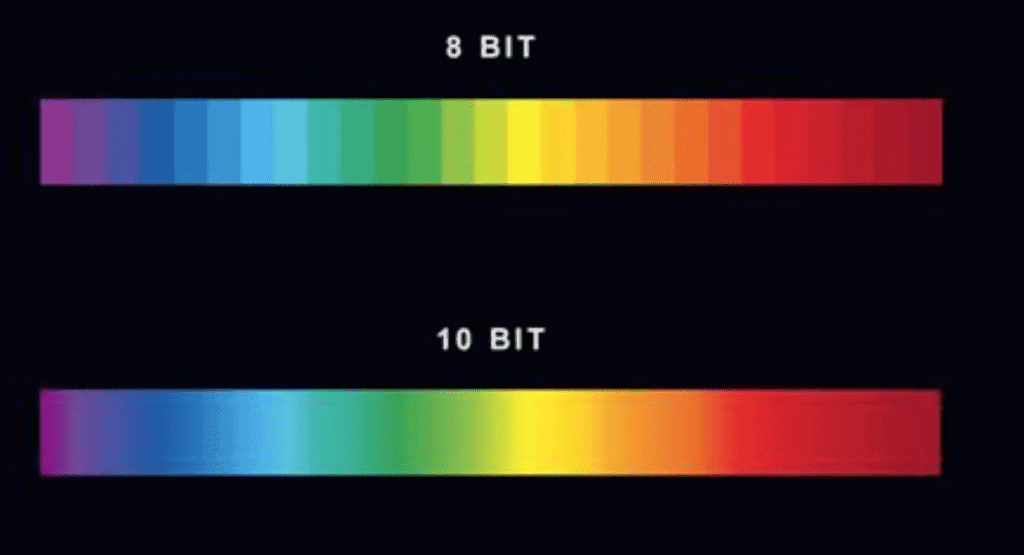

A screen capable of WCG will usually have a 10-bit panel instead of the usual 8-bit one; this property is known as bit depth. In numbers, an 8-bit panel can display a total of 16,777,216 colors, compared with 1,073,741,824 colors on a 10-bit panel. That is a jump from about 16 million to about a billion colors.

There are also 12-bit and 16-bit panels, but they are very hard to find and little content can take advantage of 12 or 16 bits.
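The color counts above follow from simple arithmetic: each of the three channels (red, green, blue) has 2^bits levels, and the total is that number cubed. A short sketch, where the function name is our own:

```python
def color_count(bits_per_channel: int, channels: int = 3) -> int:
    """Distinct colors when each of R, G and B has 2**bits levels."""
    levels = 2 ** bits_per_channel
    return levels ** channels


for bits in (8, 10, 12, 16):
    print(f"{bits}-bit: {2 ** bits} levels per channel, {color_count(bits):,} colors")
# 8-bit: 256 levels per channel, 16,777,216 colors
# 10-bit: 1024 levels per channel, 1,073,741,824 colors
# 12-bit: 4096 levels per channel, 68,719,476,736 colors
# 16-bit: 65536 levels per channel, 281,474,976,710,656 colors
```

Each extra bit doubles the levels per channel, so two extra bits multiply the total by 4³ = 64.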

Brightness – A decisive point

Another point we cannot overlook when talking about HDR is brightness. As mentioned at the beginning, HDR improves image quality by extending the range of dark and bright tones and offering more vivid colors, but for this to happen the screen also needs a minimum level of brightness.

Many cheap TVs on the market offer between 300 and 400 cd/m², below the 600 cd/m² recommended for an adequate HDR experience and far from the 1000 cd/m² that many consider necessary for truly dazzling image quality.

But of course, as always, this comes down to the consumer's budget: higher-end televisions offer more brightness, while low-end televisions remain around the aforementioned 300 cd/m².
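As a rough checklist, the thresholds quoted above (600 cd/m² recommended, 1000 cd/m² ideal) can be turned into a small helper. The tier names are hypothetical labels of our own, not an industry classification.

```python
def hdr_brightness_tier(peak_nits: float) -> str:
    """Rate a TV's peak brightness against the thresholds quoted in this article."""
    if peak_nits >= 1000:
        return "dazzling"   # the 1000 cd/m² many buyers aim for
    if peak_nits >= 600:
        return "adequate"   # the recommended starting point for HDR
    return "limited"        # e.g. the 300-400 cd/m² of many budget TVs


for tv_nits in (350, 650, 1200):
    print(tv_nits, hdr_brightness_tier(tv_nits))
```

Peak brightness is only one factor; contrast and bit depth, discussed earlier, matter just as much.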

Types of HDR

HDR10

This is the most basic type of HDR. It is an open standard, so any company can adopt it free of charge.

Despite being HDR, HDR10 is not dynamic but static: when we watch a movie, metadata is sent once, indicating fixed brightness and contrast values for the whole piece of content.
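Two of the values carried in HDR10's static metadata are MaxCLL (Maximum Content Light Level, the brightest single pixel in the whole title) and MaxFALL (Maximum Frame-Average Light Level, the brightest frame on average), both defined in CTA-861.3. The sketch below computes them, assuming frames are already given as linear RGB in cd/m² and taking each pixel's light level as its brightest component; the tiny two-pixel frames are made up for illustration.

```python
from typing import Sequence, Tuple

Pixel = Tuple[float, float, float]  # linear R, G, B, already in cd/m²
Frame = Sequence[Pixel]


def light_level(pixel: Pixel) -> float:
    # A pixel's light level is taken as its brightest component.
    return max(pixel)


def max_cll_and_fall(frames: Sequence[Frame]) -> Tuple[float, float]:
    """Return (MaxCLL, MaxFALL) for a whole title, in cd/m²."""
    max_cll = 0.0
    max_fall = 0.0
    for frame in frames:
        levels = [light_level(p) for p in frame]
        max_cll = max(max_cll, max(levels))  # brightest single pixel so far
        max_fall = max(max_fall, sum(levels) / len(levels))  # brightest frame average so far
    return max_cll, max_fall


# Two hypothetical 2-pixel frames: a dark frame with one highlight, and a bright frame.
frames = [
    [(5.0, 5.0, 5.0), (900.0, 800.0, 700.0)],
    [(300.0, 300.0, 300.0), (400.0, 350.0, 300.0)],
]
print(max_cll_and_fall(frames))  # (900.0, 452.5)
```

Because these two numbers describe the entire title, a TV using them has no per-scene information, which is exactly the limitation the dynamic formats address.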

This type of HDR is not backward compatible with SDR. It supports a maximum of 1000 cd/m² and 10-bit color.

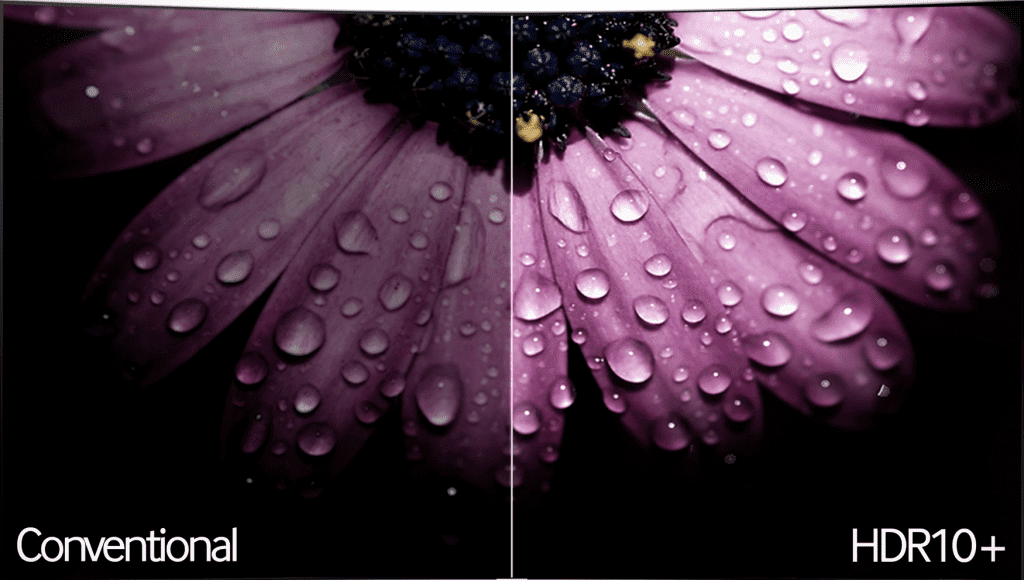

HDR10+

HDR10+ is an improved version of HDR10. Samsung designed it on top of HDR10, adding dynamic metadata, with the aim of beating, or at least matching, Dolby Vision while remaining an open standard.

HDR10+ has a theoretical maximum brightness of 4000 cd/m², while keeping the 10-bit color limit.

Although this version of HDR has not come close to matching Dolby Vision, it at least improves on its predecessor, and it is present in all Samsung UHD (4K) televisions since 2017. However, it is not as widely used as HDR10 or Dolby Vision, and not all streaming platforms (Netflix, Prime Video, Disney...) support it today.

Dolby Vision

Dolby Vision is owned by Dolby, so a company that wants its television or monitor certified as compatible must pay a fee and also meet minimum requirements.

Dolby Vision uses dynamic metadata and has a theoretical maximum of 10,000 cd/m², compared with 1000 cd/m² for HDR10 and 4000 cd/m² for HDR10+.
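The 10,000 cd/m² ceiling comes from the transfer function Dolby Vision uses, PQ (Perceptual Quantizer), standardized as SMPTE ST 2084. PQ maps absolute luminance from 0 to exactly 10,000 cd/m² onto a signal from 0 to 1. The sketch below implements the curve with the constants from that standard; the function names are our own.

```python
# SMPTE ST 2084 (PQ) constants
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32
PEAK = 10_000.0  # cd/m², the top of the PQ scale


def pq_encode(nits: float) -> float:
    """Absolute luminance in cd/m² -> PQ signal in [0, 1] (inverse EOTF)."""
    y = min(max(nits, 0.0), PEAK) / PEAK
    ym = y ** M1
    return ((C1 + C2 * ym) / (1 + C3 * ym)) ** M2


def pq_decode(signal: float) -> float:
    """PQ signal in [0, 1] -> absolute luminance in cd/m² (EOTF)."""
    e = min(max(signal, 0.0), 1.0) ** (1 / M2)
    return PEAK * (max(e - C1, 0.0) / (C2 - C3 * e)) ** (1 / M1)


for nits in (100, 1000, 4000, 10_000):
    # Show the normalized signal and the equivalent full-range 10-bit code value.
    print(nits, round(pq_encode(nits), 3), round(pq_encode(nits) * 1023))
```

The curve is strongly non-linear: about half of the signal range is spent below roughly 100 cd/m², where the eye is most sensitive to small steps, and the rest covers the highlights up to 10,000 cd/m².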

As for color depth, while HDR10 and HDR10+ top out at 10 bits, Dolby Vision allows up to 12 bits.

Even so, Dolby Vision is not yet a big leap over the others, since most high-quality content is produced with 10-bit color and screens are still far from even the 4000 cd/m² limit of HDR10+.

HLG (Hybrid Log Gamma)

HLG (Hybrid Log Gamma) is the most distinctive version of HDR. It solves a problem of the other formats: HDR content is not backward compatible with SDR screens, so when it is viewed on a screen that does not support HDR, the colors look washed out. HLG, by contrast, is compatible with SDR screens, which prevents the content from looking worse.

This type of HDR does not require metadata, which also greatly increases its compatibility, since the screen does not need to interpret any incoming metadata.

HLG encodes both HDR and SDR information in a single signal, which translates into large bandwidth savings. For this reason it is mainly used in broadcasting, live TV and satellite television.
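HLG's backward compatibility comes from the shape of its curve. The sketch below implements the HLG OETF (the function that turns scene light into a signal) with the constants published in ITU-R BT.2100 and ARIB STD-B67. Darker values follow a square-root curve similar to the gamma an SDR screen expects, and only the top of the signal switches to a logarithmic curve that packs in the bright highlights. The function name and the [0, 1] normalization are our own choices.

```python
import math

# HLG OETF constants (ITU-R BT.2100 / ARIB STD-B67)
A = 0.17883277
B = 1 - 4 * A                   # about 0.28466892
C = 0.5 - A * math.log(4 * A)   # about 0.55991073


def hlg_oetf(e: float) -> float:
    """Normalized scene light e in [0, 1] -> HLG signal in [0, 1]."""
    e = min(max(e, 0.0), 1.0)
    if e <= 1 / 12:
        # Lower part: square-root (gamma-like) curve, close to what SDR screens expect.
        return math.sqrt(3 * e)
    # Upper part: logarithmic curve that compresses the highlights.
    return A * math.log(12 * e - B) + C


for light in (0.0, 0.01, 1 / 12, 0.5, 1.0):
    print(round(light, 4), round(hlg_oetf(light), 4))
```

The two pieces meet at a signal value of 0.5, so the lower half of the signal is the "gamma" part and the upper half is the "log" part, which is where the name Hybrid Log Gamma comes from.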

These three areas are almost the only ones where this type of HDR is used, since most producers choose a standard such as HDR10 or Dolby Vision rather than HLG when recording content.

HLG was designed by the BBC and Japan's NHK, which were both looking for a solution to the bandwidth problem for television and live content.

Who is the winner?

We cannot name a clear winner, although Dolby Vision is a safer option thanks to its theoretical maximum of 10,000 cd/m² and 12-bit color, which is why a screen with Dolby Vision is a better bet for the future.
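The comparison can be summarized as a small data structure holding the figures quoted in this article. HLG is left out because no brightness figure is given for it here. The only entry not stated in the article itself is that HDR10+ uses dynamic metadata. The selection rule (highest brightness ceiling first, then deepest color) is a hypothetical way of expressing "future-proof", not an official ranking.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HdrFormat:
    name: str
    open_standard: bool
    dynamic_metadata: bool
    max_nits: int       # theoretical peak brightness quoted in this article, cd/m²
    max_bit_depth: int  # bits per color channel


FORMATS = [
    HdrFormat("HDR10", True, False, 1000, 10),
    HdrFormat("HDR10+", True, True, 4000, 10),
    HdrFormat("Dolby Vision", False, True, 10000, 12),
]


def most_headroom(formats: list) -> HdrFormat:
    """Pick the format with the highest brightness ceiling, then the deepest color."""
    return max(formats, key=lambda f: (f.max_nits, f.max_bit_depth))


print(most_headroom(FORMATS).name)  # Dolby Vision
```

Filtering the same list by `open_standard` shows the trade-off described above: the two open formats have the lower ceilings.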

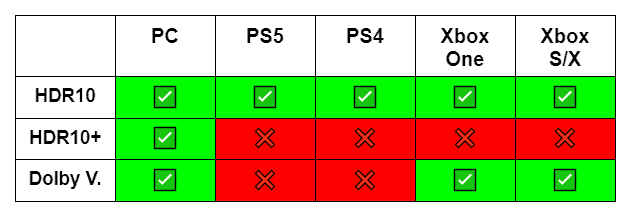

What we can compare directly is how well the different types of HDR are supported in areas such as gaming.