An artificial intelligence is not capable of learning about ethics, but every day we hear more about artificial intelligences, and it seems that as the years go by we will keep hearing more and more news about them and their evolution. The Moral Machine experiment is a small step towards that scenario.

Artificial intelligence now appears in everything from bot control in video games to smart homes and self-driving cars. But what if one of these cars finds itself in an ethical dilemma and has to choose between running over a pedestrian and saving its passengers? What decision would you make? Would you really know how to justify your choice in a situation like that, where there is no rational or mathematical solution and only ethics and morality are involved?

You can answer those questions yourself through this link, and do your bit for the Moral Machine experiment.

The answer is that those in charge of creating this artificial intelligence must have taken the ethical situation into account beforehand and programmed a behavioral routine for these extreme and specific cases. Although at the moment there are no specific road traffic laws for smart cars, some people have already asked themselves these same ethical questions. This is how the Moral Machine experiment arose. It was created in 2016 by a team of 8 researchers who wanted to analyze people's opinions in these cases, in order to create what could be the first official document on the behavior that a smart car should follow in these extreme situations.

In this experiment on ethics and morality, participants were forced to choose what they believed was correct in a wide variety of extreme situations. In this way, a large body of statistics was built up about what people believed was the best solution in each case. The study collected the results of more than 40 million decisions, in 10 different languages, from people in 233 different countries and territories.

The experiment does not measure only the popular opinion of the millions of participants. It also analyzes many other factors, such as the gender, age, race, religion, culture and origin of each of these individuals.

The result was the first document that objectively and statistically describes the ethics and the most appropriate behavior of an artificial intelligence in these types of extreme dilemmas, according to the average responses of participants from all over the world.

Moral Machine: Artificial Intelligence facing an ethical dilemma

The Moral Machine experiment shows its results graphically, both for decisions made globally and for decisions made by specific subsets of people, differentiated mainly by the origin and ethnicity of each person or group of voters. We will first look at the graphs of the votes at the global level, and then analyze the votes and moral decisions according to cultural group and origin.

Part 1: The most valuable attributes to survive an ethical and moral dilemma

Without further ado, let us look at the final conclusions and statistics of the "Moral Machine" experiment, launched in 2016 at the Massachusetts Institute of Technology (MIT), on the ethics and morals that the artificial intelligence of an automobile should follow.

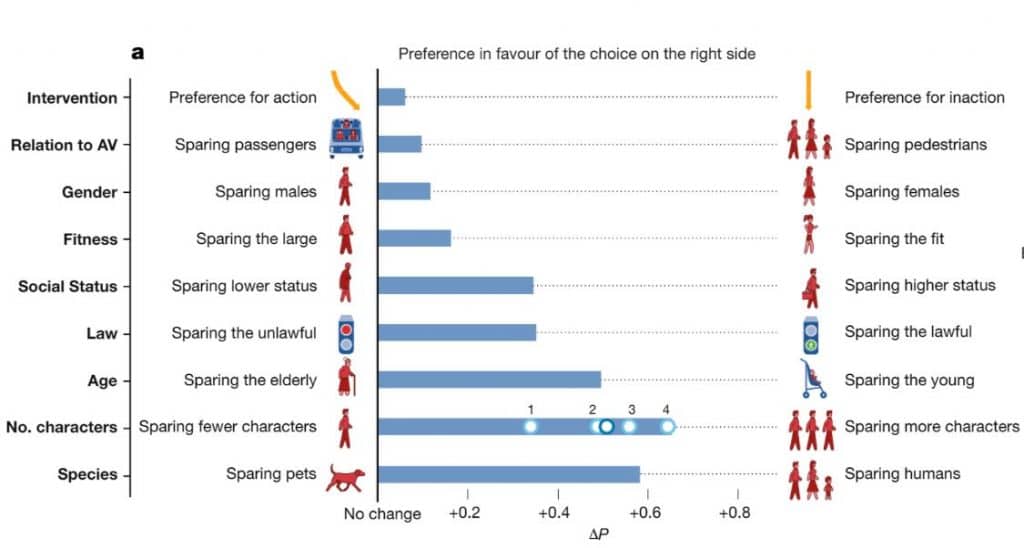

One of the most interesting results of the ethics experiment for artificial intelligence is the one in the image above, which shows the global preference for saving (sparing) one group of people or another depending on two opposite characteristics.

In this case, the most notable trend is saving four people (or more) versus one, followed by saving humans versus animals. Thus, the most important factors when deciding who lives and who dies are species, number of people and age, followed not far behind by social status and by whether each person was obeying the traffic rules.
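To make the idea of a "preference between two opposite characteristics" more concrete, here is a minimal sketch in Python. It is only an illustration under stated assumptions: the records in `decisions`, the `DIMENSIONS` table and the `sparing_preference` function are all hypothetical, the numbers are toy values rather than data from the experiment, and the simple difference in shares used here is not the statistical method applied in the study.

```python
from collections import Counter

# Hypothetical dimensions: each one contrasts two opposite characteristics.
DIMENSIONS = {
    "species": ("humans", "pets"),
    "number": ("more people", "fewer people"),
    "age": ("young", "elderly"),
}

# Toy records (NOT real data): (dimension, side the participant chose to spare).
decisions = [
    ("species", "humans"), ("species", "humans"),
    ("species", "humans"), ("species", "pets"),
    ("number", "more people"), ("number", "more people"),
    ("number", "more people"), ("number", "fewer people"),
    ("age", "young"), ("age", "elderly"),
]


def sparing_preference(records, dimensions):
    """Return, per dimension, the share of votes for the first side
    minus the share of votes for the second side (range -1 to +1)."""
    counts = Counter(records)
    scores = {}
    for dim, (first, second) in dimensions.items():
        n_first = counts[(dim, first)]
        n_second = counts[(dim, second)]
        total = n_first + n_second
        if total == 0:
            continue  # no votes recorded for this dimension
        scores[dim] = (n_first - n_second) / total
    return scores


preferences = sparing_preference(decisions, DIMENSIONS)

# Print the dimensions from the strongest preference to the weakest.
for dim, score in sorted(preferences.items(), key=lambda kv: kv[1], reverse=True):
    first, second = DIMENSIONS[dim]
    print(f"{dim}: {score:+.2f} (sparing {first} over {second})")
```

A score near +1 means almost everyone spared the first side, a score near 0 means the votes were split, and a negative score means the second side was spared more often.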

This is important to keep in mind, because it means that if four people cross a zebra crossing on a red light, the artificial intelligence driving the car would give them preference over the life of its driver. Even so, the document does not specify whether the legality criterion refers only to road traffic laws, or whether it would also include a record of each individual's personal behavior with respect to the law in general.

Other trends stand out, but to a much lesser extent: giving priority to people in good physical condition, to women, and to pedestrians. Given the choice, it also seems that slightly more people would prefer to leave the car alone, letting it follow its course and letting fate decide.

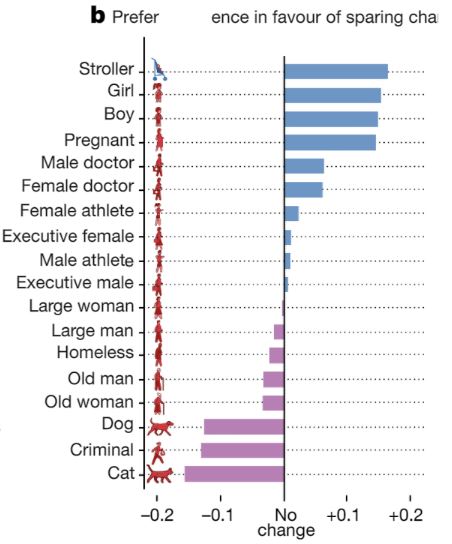

If we rank the most notable ethical preferences to reflect in an artificial intelligence, for a single individual and according to the assessment of these 40 million different situations, the result is the ranking shown in the image above.

Age and species undoubtedly play the most important role in deciding who survives. Even so, the most striking thing about this ranking is that the life of a dog would be saved sooner than that of a criminal. The same would not happen with cats, which by far have the lowest probability of surviving a moral dilemma like this one.

In descending order, the attributes most favorable to survival are being: a baby, a girl, a boy, a pregnant woman, a doctor, an athlete or an executive. On the contrary, the attributes that would reduce your probability of survival are (in descending order) being: an animal, a criminal, an elderly person, a homeless person or someone in poor or fair physical condition.

What do you think of this ranking? I hope to read your opinions in the comments.

Part 2: The responses of each set of people grouped by culture

The global tally of decisions is reflected very well in the two images from the first part of the article. However, not everyone agreed on these 40 million situations, and culture turned out to be a very decisive factor. The individuals surveyed showed a great variety of ways of acting according to their own morals and ethics, but those who agreed with each other almost always had something in common: their culture and the area of the world in which they lived.

We will therefore also briefly analyze which countries, or which cultural and ethnic groups, are more likely to make certain decisions when the only basis for the answer is each person's morals and ethics.

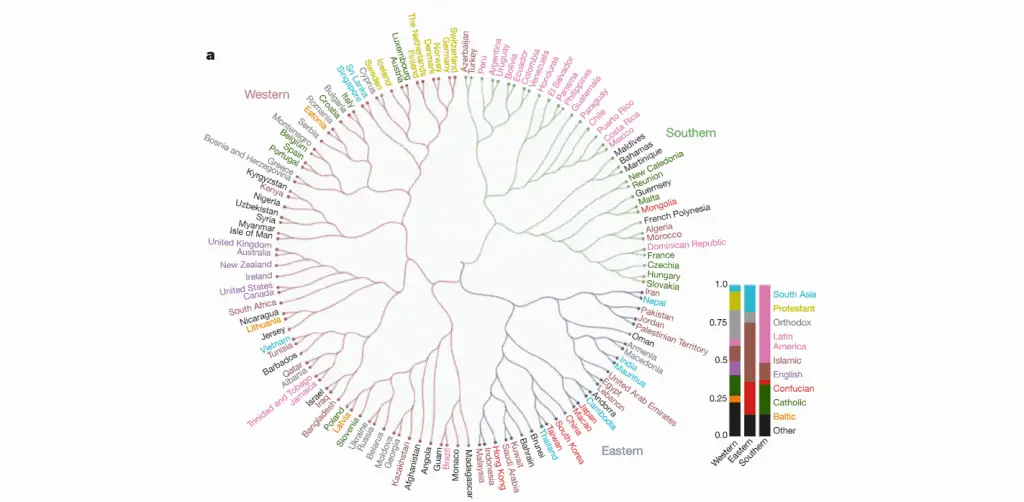

As can be seen, people from all over the world contributed to the experiment. These individuals were subsequently divided into three groups, each made up of countries with quite similar results:

- Green branches: Latin America, North Africa, and some European countries.

- Blue branches: mostly countries in Asia.

- Maroon branches: North America, some countries in North Asia and almost all of Europe.

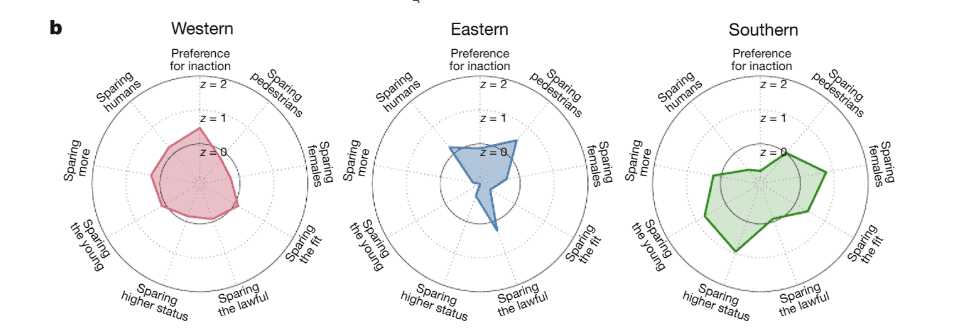

We can then compare the decisions of each of these three groups of people, which were clearly different despite the large sample of individuals and situations studied.

While the Western group maintains a more or less balanced opinion for each situation, just the opposite occurs in the group made up mainly of Latin American countries and in the group made up of Asian countries.

From what I understood when reading the article, these pie charts indicate the percentage of people who believe that a given characteristic should decide the outcome. For example, in Latin American countries there would be a very marked tendency to save people because of their social status (sparing higher status), while there would be no clear preference between acting and leaving the car on its course (preference for inaction), since this value is close to zero.

In this way, a great contrast between cultures can be observed, especially between Latin individuals and Western individuals.

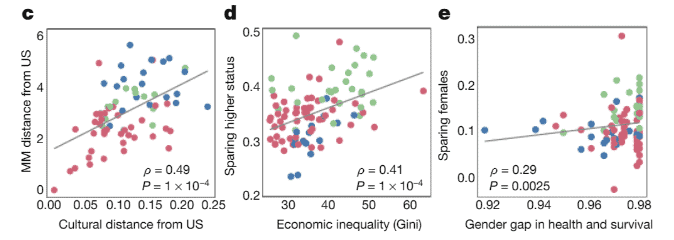

To finish, the graphs above show a few more interesting findings, such as the fact that countries with a stronger rule of law are much more in favor of saving people according to whether they obey the rules, or that countries with greater economic inequality give the most importance to saving people according to their social status.

Conclusion – Moral Machine Experiment

Although the Moral Machine may seem macabre for considering all these ethical and moral dilemmas, it will not be long before an action protocol similar to this one has to be implemented in the artificial intelligences in charge of driving cars.

On the other hand, it also seems that this ethical protocol would have to be adapted to each cultural group, since social and ethical norms vary so much from one culture to another that applying a single global protocol could be contradictory for some countries.

What do you think about this? Do not hesitate to leave a comment with your opinion, or to point out any information that you think is incorrect or could be improved.